The Hyper Membrane

Author:

Ricardo O’Nascimento

www.onascimento.com

www.popkalab.com

popkalab@gmail.com

Concept

The Hyper Membrane is an installation that employs electronic textiles, embodied interaction, and artificial intelligence to investigate possible future forms of communication among living beings.

The work revolves around the development of a flexible, lightweight surface that detects and reacts dynamically to touch. The installation aims to couple tactile input with visual output to create an experience that simulates empathy by interpreting the emotional state of the users and (re)adjusting its behavior to them.

Through technology, we should be able to detect the character of the interaction, including its hidden emotions, and change the feedback and visual output accordingly.

Smart fabrics, because they react and adapt to external stimuli, are the ideal material for creating emotionally rich interactions (Wensveen et al., 2000) or, in other words, interactions that rely on emotion manifested through actions. Because it can create more complex and meaningful relations, this type of interaction might occupy a central position in communication mechanisms that are yet to be discovered.

The proposed work aims to take one more step towards the way we might communicate in the future.

To fulfill the needs of an emotionally rich interaction, the interface should inform the communication and adapt itself during the action of the user. The interface thus informs the interaction and, at the same time, changes itself in response to it in real time. Sensors and actuators are layered on top of each other to form a smart structure capable of such interaction.

Will the fabric be smart enough to know how the user feels? Could a material predict its use and take actions on behalf of the wearer? How can we use those technologies to advance towards a truly smart textile?

User Experience

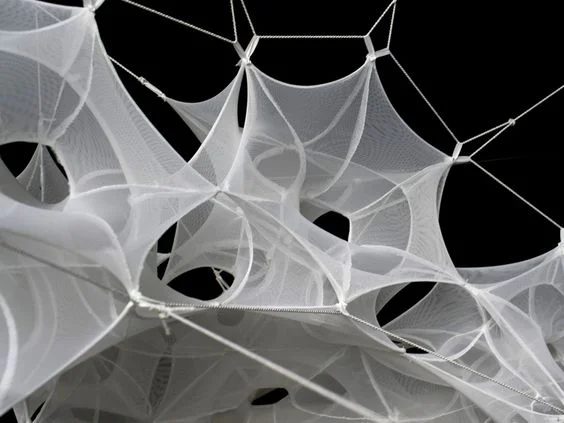

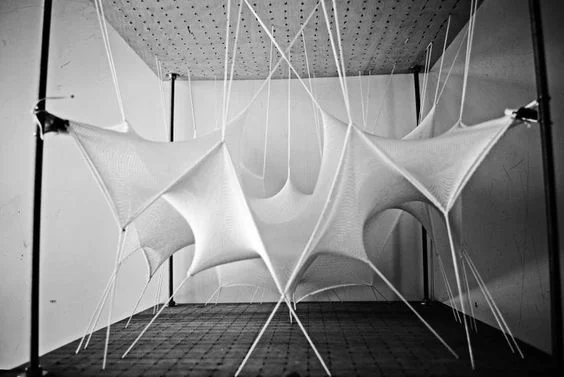

The installation is composed of two membranes arranged in space using a modular metal structure. The textile membranes should be displayed in a way that resembles a neural connection. Both membranes are connected wirelessly, like twins that share a psychic bond.

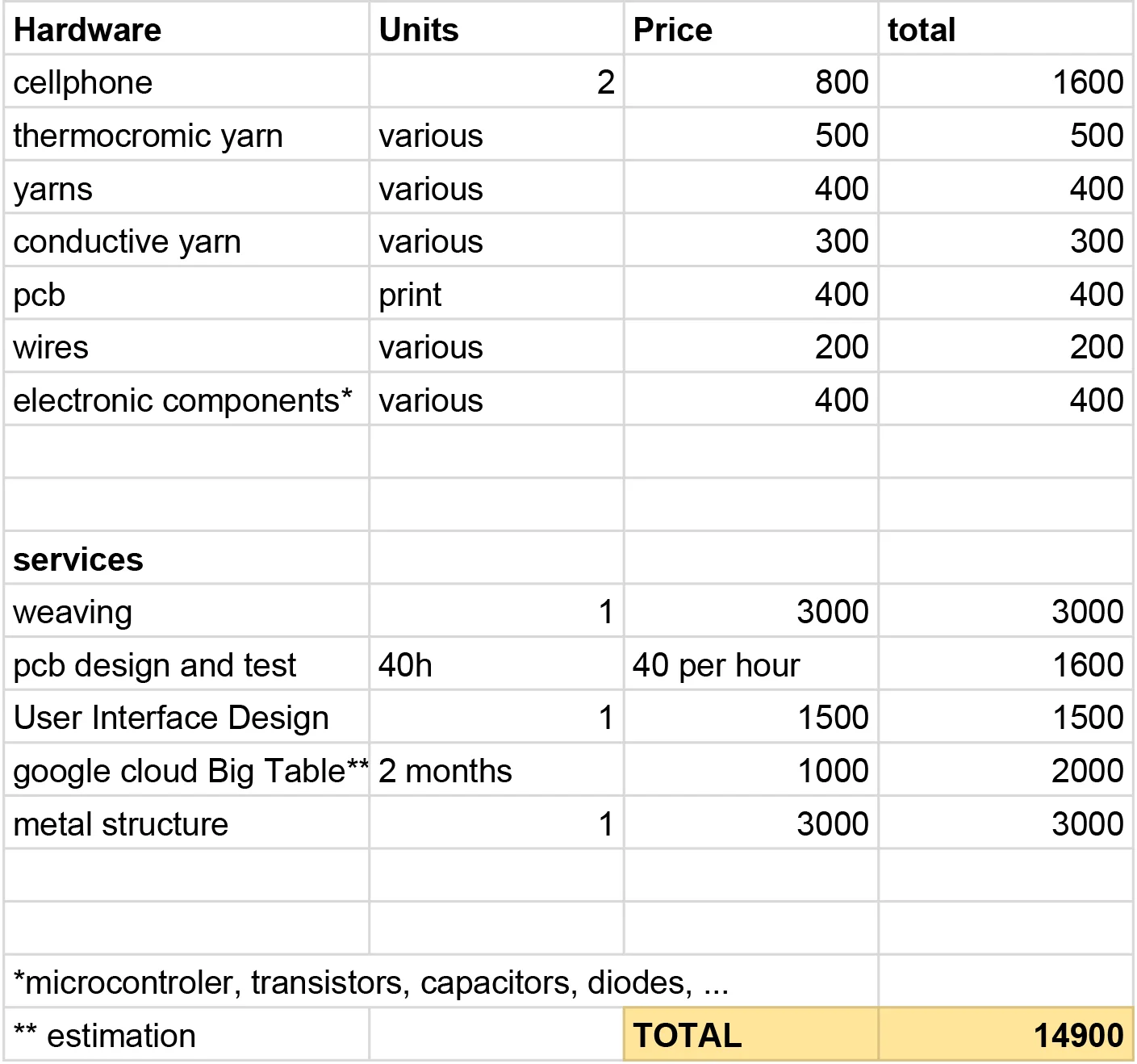

The fabrics are equipped with a sensor system, built with Google Jacquard technology, that detects the location and type of interaction performed by the user. The initial parameters should be the speed, stability, and duration of the interaction. To interpret those nuances of physical interaction, it is essential to identify, using machine learning and artificial intelligence, the emotion a person is experiencing while touching the membrane.
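As an illustration of how the three initial parameters could be derived, here is a minimal Python sketch. It assumes the sensor system delivers one continuous touch as a list of `(time, x, y)` samples; that data format, the function name `touch_features`, and the definition of stability as the inverse of speed variation are all assumptions made for this example, not part of the Jacquard technology.

```python
import math

def touch_features(samples):
    """Compute speed, stability, and duration for one continuous touch.

    samples: list of (t_seconds, x, y) tuples (hypothetical sensor format).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")

    duration = samples[-1][0] - samples[0][0]

    # Speed between each pair of consecutive samples.
    speeds = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip samples whose timestamps do not increase
        speeds.append(math.hypot(x1 - x0, y1 - y0) / dt)
    if not speeds:
        raise ValueError("timestamps must increase")

    mean_speed = sum(speeds) / len(speeds)
    variance = sum((s - mean_speed) ** 2 for s in speeds) / len(speeds)

    # Assumed definition: 1.0 for a perfectly even stroke,
    # approaching 0 as the speed becomes more erratic.
    stability = 1.0 / (1.0 + math.sqrt(variance))

    return {"speed": mean_speed, "stability": stability, "duration": duration}
```

A slow, even stroke would then yield a low speed and a stability close to 1, while an abrupt, jerky touch would yield a higher speed and a lower stability.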

Depending on the detected emotion, the visual output should (re)adapt and evolve accordingly to create personalized interfaces that change from moment to moment. Each visualization should match the mood: rapid changes for aggressive or stressed interaction, and slow changes for calm or relaxed interaction. This mapping should be explored further during the residency. The visual output is displayed on both membranes.

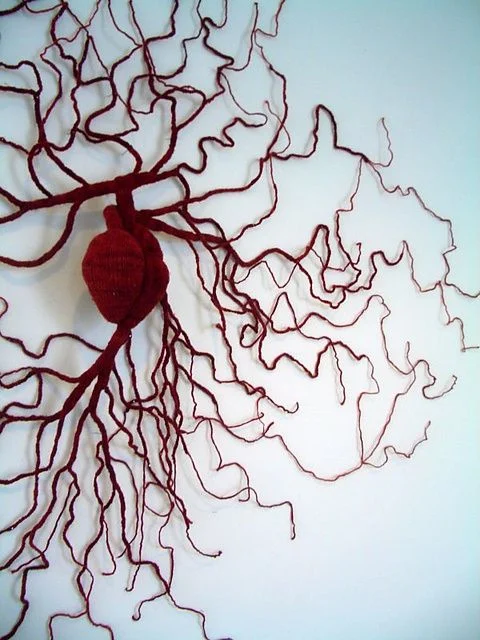

The aesthetics of the visual output are inspired by veins seen through the skin, neural connections, and nature: patterns flow from the point of interaction and expand into the nearby area. See the mood board for visual references.

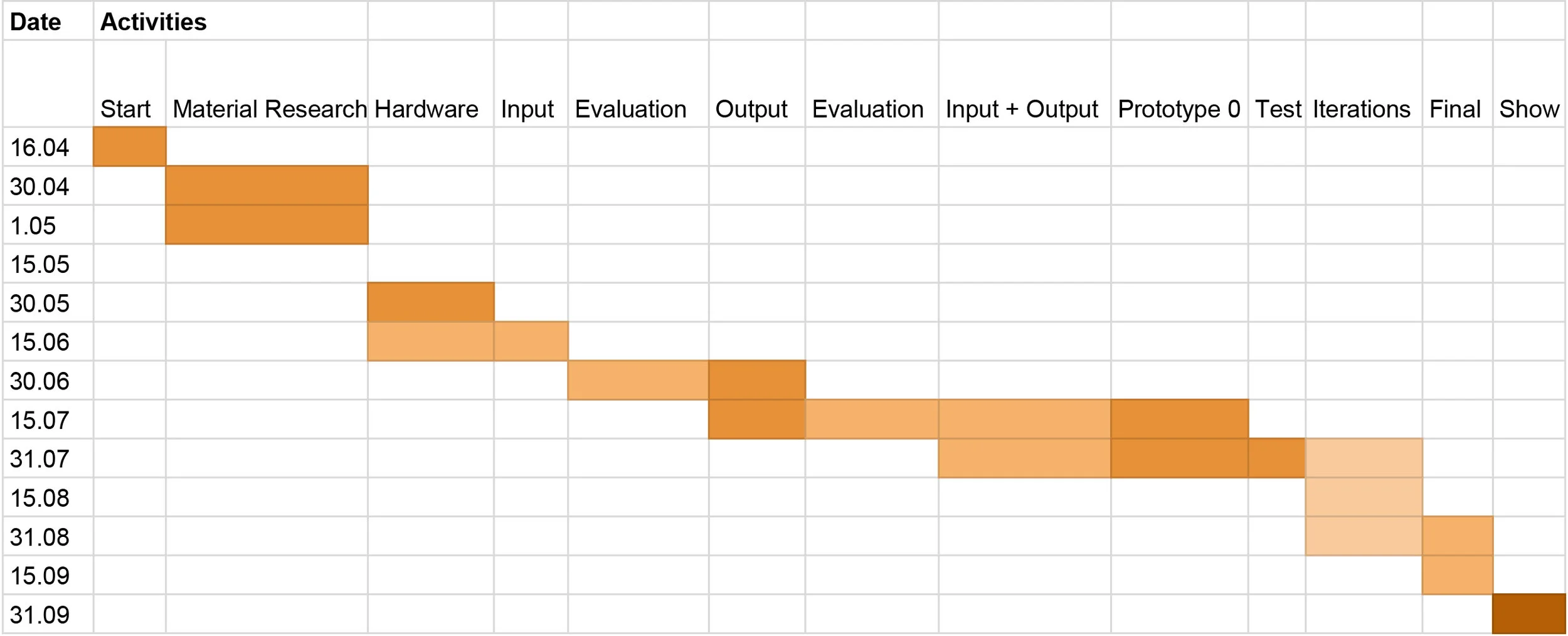

By the end of the residency, this textile interface should be fully developed, including the artificial intelligence system and all the hardware that will provide the detection and visualization of the interaction. The size of the fabric will vary according to the success of the experiments carried out.

Potential applications

This project is an artistic interpretation, but its concept could also be applied to garments, enriching the experience of the user with adaptive visual feedback.

Wensveen, S., Overbeeke, K., & Djajadiningrat, T. (2000). Touch me, hit me and I know how you feel. Proceedings of the Conference on Designing Interactive Systems Processes, Practices, Methods, and Techniques - DIS ’00, 48–52. https://doi.org/10.1145/347642.347661

This residency with Google is beneficial not only for the opportunity to use the Jacquard technology to create the hardware but also for the chance to develop the algorithm with Google technology. I could not imagine a better place to carry out this project.

I propose the use of a machine learning model to recognize those behaviors and artificial intelligence to try to assign emotions to them. We could use TensorFlow to create this model.
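To make the idea of assigning emotions to touch behaviors concrete, the sketch below uses a nearest-centroid classifier in plain Python. This is a deliberately simple stand-in, not the TensorFlow model proposed above. The feature vectors (speed, stability, duration), the two labels, and every number in `EXAMPLES` are invented for illustration; real training data would have to be collected during the residency.

```python
import math

# Invented training examples: ((speed, stability, duration), label).
EXAMPLES = [
    ((9.0, 0.2, 0.5), "stressed"),
    ((8.0, 0.3, 0.7), "stressed"),
    ((1.0, 0.9, 3.0), "calm"),
    ((1.5, 0.8, 4.0), "calm"),
]

def fit_centroids(examples):
    """Average the feature vectors of each label into one centroid."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [a + b for a, b in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [value / counts[label] for value in total]
        for label, total in sums.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

CENTROIDS = fit_centroids(EXAMPLES)
```

Calling `predict(CENTROIDS, (7.5, 0.25, 0.6))` picks the label of the closest centroid. In practice the features would need to be scaled to comparable ranges, and a learned model could replace this baseline once enough labeled interactions exist.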

The visualization should be achieved using yarns dyed with thermochromic ink, which changes color with temperature. A controlled heating circuit should be built and programmed accordingly. The speed of the visual output can be controlled; however, the change is relatively slow in comparison with a digital output. This slowness refers to the concept of calm technology, which is used critically here.
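One simple way to program such a heating circuit is an on/off controller with hysteresis, sketched below in Python. The 31 °C target, the 1 °C band, and the toy thermal model in `simulate` are assumptions chosen for illustration; the actual transition temperature depends on the thermochromic ink, and the real heating and cooling rates would have to be measured on the fabric.

```python
def heater_command(temperature_c, heater_on, target_c=31.0, band_c=1.0):
    """Decide whether the heating element should be on.

    Switches on below (target - band), off above (target + band),
    and keeps the previous state in between to avoid rapid toggling.
    """
    if temperature_c < target_c - band_c:
        return True
    if temperature_c > target_c + band_c:
        return False
    return heater_on

def simulate(steps, ambient_c=22.0, heat_rate=0.8, cool_rate=0.05, **controller_args):
    """Toy thermal model (invented constants) to exercise the controller."""
    temperature, heater_on, history = ambient_c, False, []
    for _ in range(steps):
        heater_on = heater_command(temperature, heater_on, **controller_args)
        if heater_on:
            temperature += heat_rate  # heating element adds warmth
        temperature -= cool_rate * (temperature - ambient_c)  # loss to the air
        history.append((round(temperature, 2), heater_on))
    return history
```

Raising or lowering `heat_rate` in this model changes how quickly the yarn crosses the assumed transition temperature, which is one way the speed of the visual output could be tuned to the detected mood.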

This work can potentially point to new mechanisms that take advantage of technology worn close to the body and that could be used in the future. However, it is meant to open possibilities rather than limit the result to solving a specific problem.